Goal

Future-proof public sector client’s data management processes (collection, sharing, use, storage and publication). Consolidate data assets from multiple source systems for analysts to gain better insights and enrich their analyses.

Role

- Developed and optimized AWS-based ETL processes: Designed and implemented 5 AWS Lambda functions and multiple AWS Glue ETL jobs, enhancing data ingestion and processing efficiency. This resulted in a 30% reduction in data pipeline execution time by automating data mapping, data asset initialization, and data preparation workflows.

- Implemented robust data sanitization and compliance measures: Enhanced data quality and regulatory compliance by debugging and extending AWS Glue ETL jobs to perform comprehensive data sanitization, personally identifiable information (PII) identification and redaction. This ensured all data handling adhered to GDPR standards, securing sensitive information across all datasets.

- Streamlined data migration and documentation: Authored high-level and low-level design documents and testing plans to ensure clear, maintainable code and reliable data migration processes. Developed 25 custom scripts for efficient data migration into AWS PostgreSQL, facilitating the seamless transition of critical data assets with minimal downtime.

Capability

Data-driven decision-making and reporting enabled via custom built:

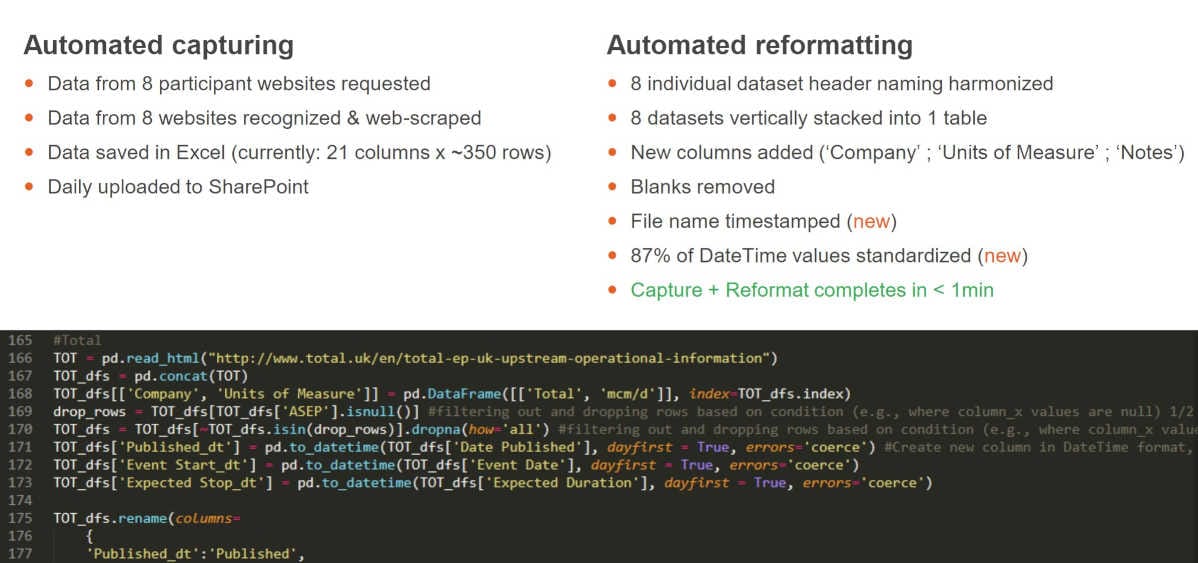

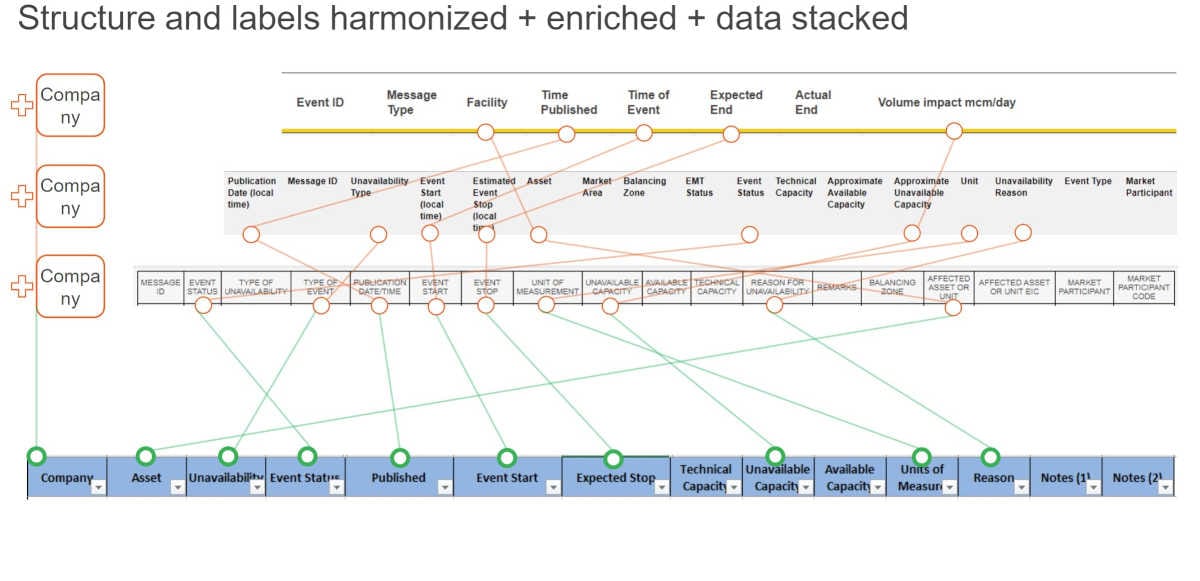

- Data Integration: Combined data from various sources into a unified data warehouse.

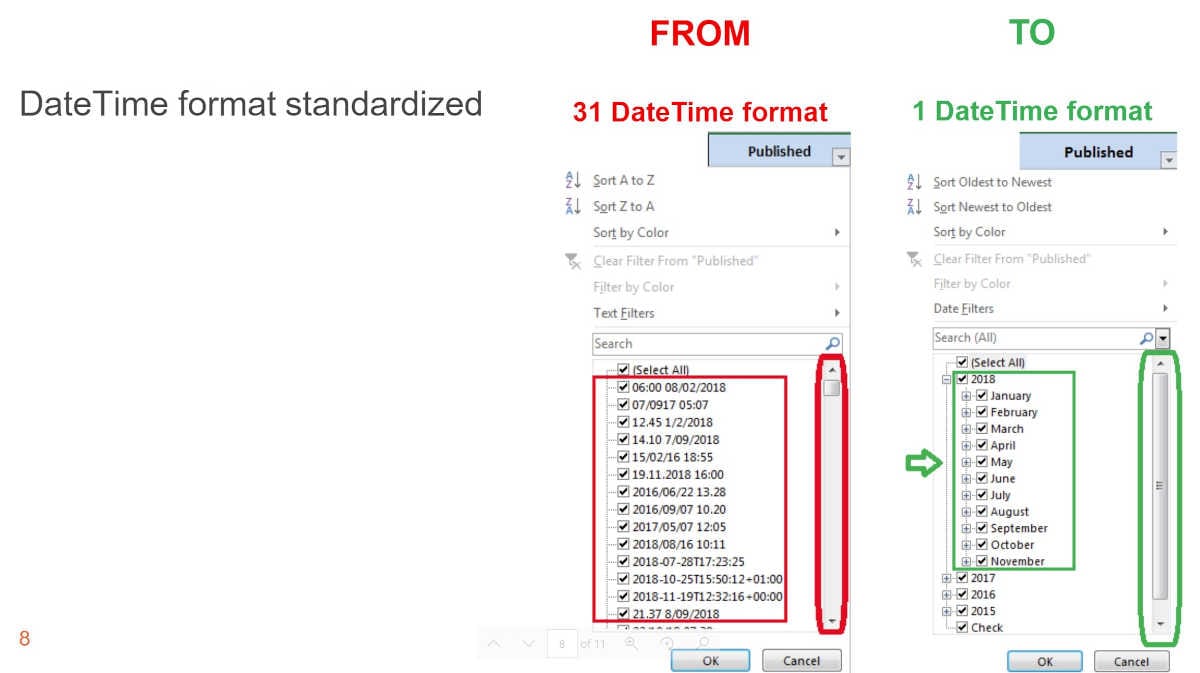

- Data Transformation: Standardised and sanitised data for consistency and accuracy.

- Data Accessibility: Provided a user-friendly interface for accessing and querying data.

- Reporting and Analytics: Enabled comprehensive reporting and analytics capabilities.

Stack

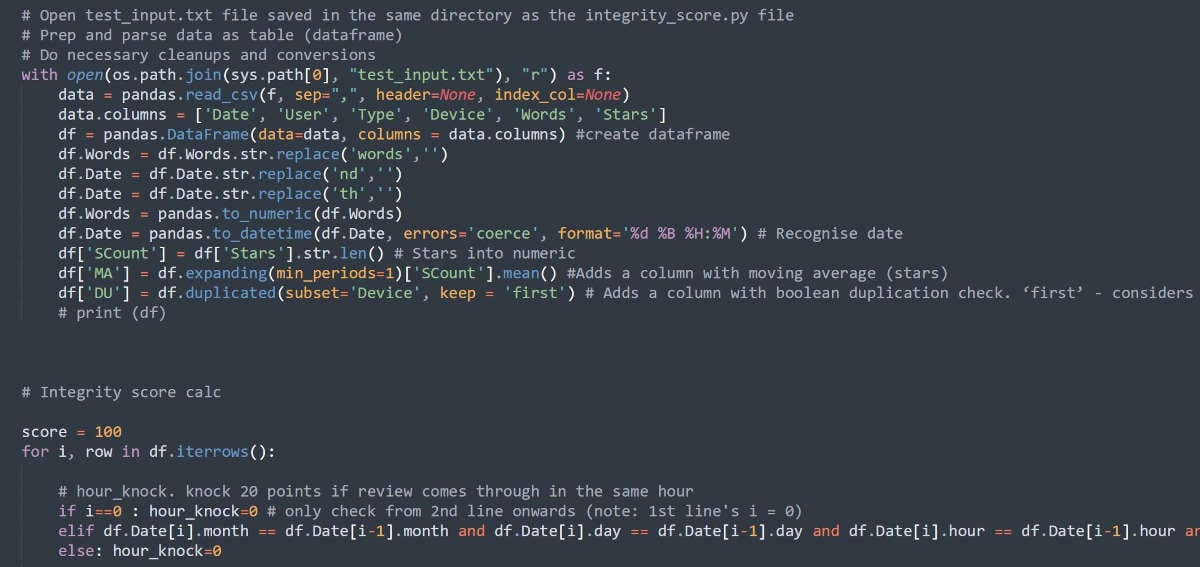

- Python – coding language for data pipelines

- SQL – DQL, DML, DDL

- AWS Cloud Services

- Aurora RDS – To host a data warehouse

- Glue – for data pipelines

- Lambda – serverless compute platform

- Step Function – workflow orchestrator

- S3 – cloud storage

- Comprehend – Machine Learning powered, continuoulsy trained Natural Language Processing (NLP) service. Used to identify and redact the personally identifiable information (PII).

- CodeCommit – for repository management

- CloudShell – browser-based CLI environment

- Apache Airflow – for orchestration of data pipelines using DAGs

- Docker Engine / Desktop – to create containers for airflow environment and postgresql instance

- DevOps – GitHub Actions, Terraform

- Git – for repository management

- Pycharm – IDE

- Atlassian Toolset – Jira / Confluence

- Sharepoint – content collaboration and workspace

- Mural – content collaboration / design

- Microsoft Visio – solution design

Year

2024